2024-02-23 03:26:17 +03:00

|

|

|

// Copyright 2014 The Gogs Authors. All rights reserved.

|

|

|

|

|

// Copyright 2018 The Gitea Authors. All rights reserved.

|

|

|

|

|

// Copyright 2024 The Forgejo Authors. All rights reserved.

|

2018-01-05 13:56:52 +03:00

|

|

|

// All rights reserved.

|

2022-11-27 21:20:29 +03:00

|

|

|

// SPDX-License-Identifier: MIT

|

2014-03-24 14:25:15 +04:00

|

|

|

|

|

|

|

|

package repo

|

|

|

|

|

|

|

|

|

|

import (

|

2021-06-07 17:52:59 +03:00

|

|

|

"errors"

|

2016-10-12 16:28:51 +03:00

|

|

|

"fmt"

|

2021-11-16 21:18:25 +03:00

|

|

|

"html"

|

2019-12-16 09:20:25 +03:00

|

|

|

"net/http"

|

2021-11-16 21:18:25 +03:00

|

|

|

"net/url"

|

|

|

|

|

"strconv"

|

2015-08-08 17:43:14 +03:00

|

|

|

"strings"

|

2020-01-17 09:03:40 +03:00

|

|

|

"time"

|

2015-08-08 17:43:14 +03:00

|

|

|

|

2016-11-10 19:24:48 +03:00

|

|

|

"code.gitea.io/gitea/models"

|

2022-08-25 05:31:57 +03:00

|

|

|

activities_model "code.gitea.io/gitea/models/activities"

|

2021-09-24 14:32:56 +03:00

|

|

|

"code.gitea.io/gitea/models/db"

|

2022-06-12 18:51:54 +03:00

|

|

|

git_model "code.gitea.io/gitea/models/git"

|

2022-06-13 12:37:59 +03:00

|

|

|

issues_model "code.gitea.io/gitea/models/issues"

|

2022-03-29 09:29:02 +03:00

|

|

|

"code.gitea.io/gitea/models/organization"

|

2022-05-11 13:09:36 +03:00

|

|

|

access_model "code.gitea.io/gitea/models/perm/access"

|

2022-06-11 17:44:20 +03:00

|

|

|

pull_model "code.gitea.io/gitea/models/pull"

|

feat(quota): Quota enforcement

The previous commit laid out the foundation of the quota engine, this

one builds on top of it, and implements the actual enforcement.

Enforcement happens at the route decoration level, whenever possible. In

case of the API, when over quota, a 413 error is returned, with an

appropriate JSON payload. In case of web routes, a 413 HTML page is

rendered with similar information.

This implementation is for a **soft quota**: quota usage is checked

before an operation is to be performed, and the operation is *only*

denied if the user is already over quota. This makes it possible to go

over quota, but has the significant advantage of being practically

implementable within the current Forgejo architecture.

The goal of enforcement is to deny actions that can make the user go

over quota, and allow the rest. As such, deleting things should - in

almost all cases - be possible. A prime exception is deleting files via

the web UI: that creates a new commit, which in turn increases repo

size, and is therefore denied if the user is over quota.

Limitations

-----------

Because we generally work at a route decorator level, and rarely

look *into* the operation itself, `size:repos:public` and

`size:repos:private` are not enforced at this level; instead, the engine

enforces against `size:repos:all`. This will be improved in the future.

AGit does not play very well with this system, because AGit PRs count

toward the repo they're opened against, while in the GitHub-style fork +

pull model, they count against the fork. This, too, can be improved in the

future.

There's very little done on the UI side to guard against going over

quota. What this patch implements is enforcement, not prevention. The

UI will still let you *try* operations that *will* result in a denial.

Signed-off-by: Gergely Nagy <forgejo@gergo.csillger.hu>

2024-07-06 11:30:16 +03:00

|

|

|

quota_model "code.gitea.io/gitea/models/quota"

|

2021-12-10 04:27:50 +03:00

|

|

|

repo_model "code.gitea.io/gitea/models/repo"

|

2021-11-09 22:57:58 +03:00

|

|

|

"code.gitea.io/gitea/models/unit"

|

2021-11-24 12:49:20 +03:00

|

|

|

user_model "code.gitea.io/gitea/models/user"

|

2016-11-10 19:24:48 +03:00

|

|

|

"code.gitea.io/gitea/modules/base"

|

2024-02-25 01:34:51 +03:00

|

|

|

"code.gitea.io/gitea/modules/emoji"

|

2019-03-27 12:33:00 +03:00

|

|

|

"code.gitea.io/gitea/modules/git"

|

Simplify how git repositories are opened (#28937)

## Purpose

This is a refactor toward building an abstraction over managing git

repositories.

Afterwards, it does not matter anymore if they are stored on the local

disk or somewhere remote.

## What this PR changes

We used `git.OpenRepository` everywhere previously.

Now, we should split them into two distinct functions:

Firstly, there are temporary repositories which do not change:

```go

git.OpenRepository(ctx, diskPath)

```

Gitea managed repositories having a record in the database in the

`repository` table are moved into the new package `gitrepo`:

```go

gitrepo.OpenRepository(ctx, repo_model.Repo)

```

Why is `repo_model.Repository` the second parameter instead of file

path?

Because then we can easily adapt our repository storage strategy.

The repositories can be stored locally, however, they could just as well

be stored on a remote server.

## Further changes in other PRs

- A Git Command wrapper on package `gitrepo` could be created. i.e.

`NewCommand(ctx, repo_model.Repository, commands...)`. `git.RunOpts{Dir:

repo.RepoPath()}`, the directory should be empty before invoking this

method and it can be filled in the function only. #28940

- Remove the `RepoPath()`/`WikiPath()` functions to reduce the

possibility of mistakes.

---------

Co-authored-by: delvh <dev.lh@web.de>

2024-01-27 23:09:51 +03:00

|

|

|

"code.gitea.io/gitea/modules/gitrepo"

|

2022-09-02 10:58:49 +03:00

|

|

|

issue_template "code.gitea.io/gitea/modules/issue/template"

|

2016-11-10 19:24:48 +03:00

|

|

|

"code.gitea.io/gitea/modules/log"

|

2024-02-23 05:18:33 +03:00

|

|

|

"code.gitea.io/gitea/modules/optional"

|

2016-11-10 19:24:48 +03:00

|

|

|

"code.gitea.io/gitea/modules/setting"

|

2020-06-07 03:45:12 +03:00

|

|

|

"code.gitea.io/gitea/modules/structs"

|

2019-01-21 14:45:32 +03:00

|

|

|

"code.gitea.io/gitea/modules/util"

|

2021-01-26 18:36:53 +03:00

|

|

|

"code.gitea.io/gitea/modules/web"

|

2020-02-22 16:08:48 +03:00

|

|

|

"code.gitea.io/gitea/routers/utils"

|

2022-03-31 17:53:08 +03:00

|

|

|

asymkey_service "code.gitea.io/gitea/services/asymkey"

|

2022-06-11 17:44:20 +03:00

|

|

|

"code.gitea.io/gitea/services/automerge"

|

2024-02-27 10:12:22 +03:00

|

|

|

"code.gitea.io/gitea/services/context"

|

|

|

|

|

"code.gitea.io/gitea/services/context/upload"

|

2021-04-06 22:44:05 +03:00

|

|

|

"code.gitea.io/gitea/services/forms"

|

2019-09-06 05:20:09 +03:00

|

|

|

"code.gitea.io/gitea/services/gitdiff"

|

2023-09-05 21:37:47 +03:00

|

|

|

notify_service "code.gitea.io/gitea/services/notify"

|

2019-09-27 03:22:36 +03:00

|

|

|

pull_service "code.gitea.io/gitea/services/pull"

|

2019-10-26 09:54:11 +03:00

|

|

|

repo_service "code.gitea.io/gitea/services/repository"

|

2023-05-17 11:11:13 +03:00

|

|

|

|

|

|

|

|

"github.com/gobwas/glob"

|

2014-03-24 14:25:15 +04:00

|

|

|

)
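The commit notes attached to the `gitrepo` import describe opening managed repositories from their database record instead of a disk path, so that the storage location can change later. A minimal sketch of that idea follows. The `Repository`, `Storage`, `LocalStorage`, `RemoteStorage` and `OpenManaged` names are hypothetical and are not the API of the `gitrepo` package; the sketch only resolves a location string and does not open a Git repository.

```go
package main

import (
	"errors"
	"fmt"
	"path"
)

// Repository is a hypothetical stand-in for the database record of a managed
// repository; it is not the real repo_model.Repository type.
type Repository struct {
	OwnerName string
	Name      string
}

// Storage is a hypothetical backend that knows where a managed repository lives.
type Storage interface {
	Locate(repo *Repository) string
}

// LocalStorage keeps repositories below a root directory on the local disk.
type LocalStorage struct{ Root string }

// Locate builds the on-disk location from the record.
func (s LocalStorage) Locate(repo *Repository) string {
	return path.Join(s.Root, repo.OwnerName, repo.Name+".git")
}

// RemoteStorage is an illustrative backend that addresses repositories by URL.
type RemoteStorage struct{ BaseURL string }

// Locate builds a remote address from the record.
func (s RemoteStorage) Locate(repo *Repository) string {
	return s.BaseURL + "/" + repo.OwnerName + "/" + repo.Name + ".git"
}

var errNilRepository = errors.New("nil repository record")

// OpenManaged resolves a repository record to a location through the chosen
// backend. Callers pass the record, never a path.
func OpenManaged(s Storage, repo *Repository) (string, error) {
	if repo == nil {
		return "", errNilRepository
	}
	return s.Locate(repo), nil
}

func main() {
	repo := &Repository{OwnerName: "alice", Name: "demo"}
	for _, s := range []Storage{
		LocalStorage{Root: "/data/repos"},
		RemoteStorage{BaseURL: "https://git.example.org"},
	} {
		location, err := OpenManaged(s, repo)
		if err != nil {
			fmt.Println("error:", err)
			continue
		}
		fmt.Println(location)
	}
}
```

Because callers only ever hand over the record, swapping `LocalStorage` for another backend does not touch any call site, which is the property the commit notes argue for.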

|

|

|

|

|

|

2014-06-23 07:11:12 +04:00

|

|

|

const (

|

2016-11-24 10:04:31 +03:00

|

|

|

tplFork base.TplName = "repo/pulls/fork"

|

2019-06-07 23:29:29 +03:00

|

|

|

tplCompareDiff base.TplName = "repo/diff/compare"

|

2016-11-24 10:04:31 +03:00

|

|

|

tplPullCommits base.TplName = "repo/pulls/commits"

|

|

|

|

|

tplPullFiles base.TplName = "repo/pulls/files"

|

2016-02-18 01:21:31 +03:00

|

|

|

|

2016-11-24 10:04:31 +03:00

|

|

|

pullRequestTemplateKey = "PullRequestTemplate"

|

2016-02-18 01:21:31 +03:00

|

|

|

)

|

|

|

|

|

|

2022-01-20 20:46:10 +03:00

|

|

|

var pullRequestTemplateCandidates = []string{

|

|

|

|

|

"PULL_REQUEST_TEMPLATE.md",

|

2022-09-02 10:58:49 +03:00

|

|

|

"PULL_REQUEST_TEMPLATE.yaml",

|

|

|

|

|

"PULL_REQUEST_TEMPLATE.yml",

|

2022-01-20 20:46:10 +03:00

|

|

|

"pull_request_template.md",

|

2022-09-02 10:58:49 +03:00

|

|

|

"pull_request_template.yaml",

|

|

|

|

|

"pull_request_template.yml",

|

2023-08-25 23:49:17 +03:00

|

|

|

".forgejo/PULL_REQUEST_TEMPLATE.md",

|

|

|

|

|

".forgejo/PULL_REQUEST_TEMPLATE.yaml",

|

|

|

|

|

".forgejo/PULL_REQUEST_TEMPLATE.yml",

|

|

|

|

|

".forgejo/pull_request_template.md",

|

|

|

|

|

".forgejo/pull_request_template.yaml",

|

|

|

|

|

".forgejo/pull_request_template.yml",

|

2022-01-20 20:46:10 +03:00

|

|

|

".gitea/PULL_REQUEST_TEMPLATE.md",

|

2022-09-02 10:58:49 +03:00

|

|

|

".gitea/PULL_REQUEST_TEMPLATE.yaml",

|

|

|

|

|

".gitea/PULL_REQUEST_TEMPLATE.yml",

|

2022-01-20 20:46:10 +03:00

|

|

|

".gitea/pull_request_template.md",

|

2022-09-02 10:58:49 +03:00

|

|

|

".gitea/pull_request_template.yaml",

|

|

|

|

|

".gitea/pull_request_template.yml",

|

2022-01-20 20:46:10 +03:00

|

|

|

".github/PULL_REQUEST_TEMPLATE.md",

|

2022-09-02 10:58:49 +03:00

|

|

|

".github/PULL_REQUEST_TEMPLATE.yaml",

|

|

|

|

|

".github/PULL_REQUEST_TEMPLATE.yml",

|

2022-01-20 20:46:10 +03:00

|

|

|

".github/pull_request_template.md",

|

2022-09-02 10:58:49 +03:00

|

|

|

".github/pull_request_template.yaml",

|

|

|

|

|

".github/pull_request_template.yml",

|

2022-01-20 20:46:10 +03:00

|

|

|

}

|

2014-06-23 07:11:12 +04:00

|

|

|

|

2021-12-10 04:27:50 +03:00

|

|

|

func getRepository(ctx *context.Context, repoID int64) *repo_model.Repository {

|

2022-12-03 05:48:26 +03:00

|

|

|

repo, err := repo_model.GetRepositoryByID(ctx, repoID)

|

2015-08-08 12:10:34 +03:00

|

|

|

if err != nil {

|

2021-12-10 04:27:50 +03:00

|

|

|

if repo_model.IsErrRepoNotExist(err) {

|

2018-01-11 00:34:17 +03:00

|

|

|

ctx.NotFound("GetRepositoryByID", nil)

|

2015-08-08 12:10:34 +03:00

|

|

|

} else {

|

2018-01-11 00:34:17 +03:00

|

|

|

ctx.ServerError("GetRepositoryByID", err)

|

2015-08-08 12:10:34 +03:00

|

|

|

}

|

|

|

|

|

return nil

|

|

|

|

|

}

|

2015-09-01 18:57:02 +03:00

|

|

|

|

2022-05-11 13:09:36 +03:00

|

|

|

perm, err := access_model.GetUserRepoPermission(ctx, repo, ctx.Doer)

|

2018-11-28 14:26:14 +03:00

|

|

|

if err != nil {

|

|

|

|

|

ctx.ServerError("GetUserRepoPermission", err)

|

|

|

|

|

return nil

|

|

|

|

|

}

|

|

|

|

|

|

2021-11-09 22:57:58 +03:00

|

|

|

if !perm.CanRead(unit.TypeCode) {

|

2019-11-11 18:15:29 +03:00

|

|

|

log.Trace("Permission Denied: User %-v cannot read %-v of repo %-v\n"+

|

|

|

|

|

"User in repo has Permissions: %-+v",

|

2022-03-22 10:03:22 +03:00

|

|

|

ctx.Doer,

|

2021-11-09 22:57:58 +03:00

|

|

|

unit.TypeCode,

|

2019-11-11 18:15:29 +03:00

|

|

|

ctx.Repo,

|

|

|

|

|

perm)

|

|

|

|

|

ctx.NotFound("getRepository", nil)

|

|

|

|

|

return nil

|

|

|

|

|

}

|

|

|

|

|

return repo

|

|

|

|

|

}

|

|

|

|

|

|

2024-02-09 17:57:08 +03:00

|

|

|

func updateForkRepositoryInContext(ctx *context.Context, forkRepo *repo_model.Repository) bool {

|

|

|

|

|

if forkRepo == nil {

|

|

|

|

|

ctx.NotFound("No repository in context", nil)

|

|

|

|

|

return false

|

2019-11-11 18:15:29 +03:00

|

|

|

}

|

|

|

|

|

|

|

|

|

|

if forkRepo.IsEmpty {

|

|

|

|

|

log.Trace("Empty repository %-v", forkRepo)

|

2024-02-09 17:57:08 +03:00

|

|

|

ctx.NotFound("updateForkRepositoryInContext", nil)

|

|

|

|

|

return false

|

2015-09-01 18:57:02 +03:00

|

|

|

}

|

|

|

|

|

|

2023-02-18 15:11:03 +03:00

|

|

|

if err := forkRepo.LoadOwner(ctx); err != nil {

|

|

|

|

|

ctx.ServerError("LoadOwner", err)

|

2024-02-09 17:57:08 +03:00

|

|

|

return false

|

2015-08-08 12:10:34 +03:00

|

|

|

}

|

2020-06-07 03:45:12 +03:00

|

|

|

|

|

|

|

|

ctx.Data["repo_name"] = forkRepo.Name

|

|

|

|

|

ctx.Data["description"] = forkRepo.Description

|

|

|

|

|

ctx.Data["IsPrivate"] = forkRepo.IsPrivate || forkRepo.Owner.Visibility == structs.VisibleTypePrivate

|

2023-09-14 20:09:32 +03:00

|

|

|

canForkToUser := forkRepo.OwnerID != ctx.Doer.ID && !repo_model.HasForkedRepo(ctx, ctx.Doer.ID, forkRepo.ID)

|

2020-06-07 03:45:12 +03:00

|

|

|

|

2021-11-16 21:18:25 +03:00

|

|

|

ctx.Data["ForkRepo"] = forkRepo

|

2015-08-08 12:10:34 +03:00

|

|

|

|

2023-09-14 20:09:32 +03:00

|

|

|

ownedOrgs, err := organization.GetOrgsCanCreateRepoByUserID(ctx, ctx.Doer.ID)

|

2021-11-22 18:21:55 +03:00

|

|

|

if err != nil {

|

2021-11-25 08:03:03 +03:00

|

|

|

ctx.ServerError("GetOrgsCanCreateRepoByUserID", err)

|

2024-02-09 17:57:08 +03:00

|

|

|

return false

|

2015-08-08 12:10:34 +03:00

|

|

|

}

|

2022-03-29 09:29:02 +03:00

|

|

|

var orgs []*organization.Organization

|

2021-11-22 18:21:55 +03:00

|

|

|

for _, org := range ownedOrgs {

|

2023-09-14 20:09:32 +03:00

|

|

|

if forkRepo.OwnerID != org.ID && !repo_model.HasForkedRepo(ctx, org.ID, forkRepo.ID) {

|

2017-10-15 18:06:07 +03:00

|

|

|

orgs = append(orgs, org)

|

|

|

|

|

}

|

|

|

|

|

}

|

2017-11-06 07:12:55 +03:00

|

|

|

|

2022-01-20 20:46:10 +03:00

|

|

|

traverseParentRepo := forkRepo

|

2017-11-06 07:12:55 +03:00

|

|

|

for {

|

2022-03-22 10:03:22 +03:00

|

|

|

if ctx.Doer.ID == traverseParentRepo.OwnerID {

|

2017-11-06 07:12:55 +03:00

|

|

|

canForkToUser = false

|

|

|

|

|

} else {

|

|

|

|

|

for i, org := range orgs {

|

|

|

|

|

if org.ID == traverseParentRepo.OwnerID {

|

|

|

|

|

orgs = append(orgs[:i], orgs[i+1:]...)

|

|

|

|

|

break

|

|

|

|

|

}

|

|

|

|

|

}

|

|

|

|

|

}

|

|

|

|

|

|

|

|

|

|

if !traverseParentRepo.IsFork {

|

|

|

|

|

break

|

|

|

|

|

}

|

2022-12-03 05:48:26 +03:00

|

|

|

traverseParentRepo, err = repo_model.GetRepositoryByID(ctx, traverseParentRepo.ForkID)

|

2017-11-06 07:12:55 +03:00

|

|

|

if err != nil {

|

2018-01-11 00:34:17 +03:00

|

|

|

ctx.ServerError("GetRepositoryByID", err)

|

2024-02-09 17:57:08 +03:00

|

|

|

return false

|

2017-11-06 07:12:55 +03:00

|

|

|

}

|

|

|

|

|

}

|

|

|

|

|

|

|

|

|

|

ctx.Data["CanForkToUser"] = canForkToUser

|

2017-10-15 18:06:07 +03:00

|

|

|

ctx.Data["Orgs"] = orgs

|

|

|

|

|

|

|

|

|

|

if canForkToUser {

|

2022-03-22 10:03:22 +03:00

|

|

|

ctx.Data["ContextUser"] = ctx.Doer

|

2017-10-15 18:06:07 +03:00

|

|

|

} else if len(orgs) > 0 {

|

|

|

|

|

ctx.Data["ContextUser"] = orgs[0]

|

2023-07-14 10:56:20 +03:00

|

|

|

} else {

|

|

|

|

|

ctx.Data["CanForkRepo"] = false

|

2024-03-13 17:11:49 +03:00

|

|

|

ctx.RenderWithErr(ctx.Tr("repo.fork_no_valid_owners"), tplFork, nil)

|

2024-02-09 17:57:08 +03:00

|

|

|

return false

|

2017-10-15 18:06:07 +03:00

|

|

|

}

|

2015-08-08 12:10:34 +03:00

|

|

|

|

2023-09-29 04:48:39 +03:00

|

|

|

branches, err := git_model.FindBranchNames(ctx, git_model.FindBranchOptions{

|

|

|

|

|

RepoID: ctx.Repo.Repository.ID,

|

|

|

|

|

ListOptions: db.ListOptions{

|

|

|

|

|

ListAll: true,

|

|

|

|

|

},

|

2024-02-23 05:18:33 +03:00

|

|

|

IsDeletedBranch: optional.Some(false),

|

2023-09-29 04:48:39 +03:00

|

|

|

// The default branch is excluded here and prepended below as the first option

|

|

|

|

|

ExcludeBranchNames: []string{ctx.Repo.Repository.DefaultBranch},

|

|

|

|

|

})

|

|

|

|

|

if err != nil {

|

|

|

|

|

ctx.ServerError("FindBranchNames", err)

|

2024-02-09 17:57:08 +03:00

|

|

|

return false

|

2023-09-29 04:48:39 +03:00

|

|

|

}

|

|

|

|

|

ctx.Data["Branches"] = append([]string{ctx.Repo.Repository.DefaultBranch}, branches...)

|

|

|

|

|

|

2024-02-09 17:57:08 +03:00

|

|

|

return true

|

|

|

|

|

}
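The loop in `updateForkRepositoryInContext` walks from the repository up through its fork parents and rules out every owner that already holds a repository in that chain. The sketch below isolates that traversal under simplifying assumptions: an in-memory map replaces the `repo_model.GetRepositoryByID` lookup, the `Repo` type and both function names are hypothetical, and the chain is assumed to be acyclic.

```go
package main

import (
	"errors"
	"fmt"
)

// Repo is a hypothetical, trimmed-down repository record.
type Repo struct {
	ID      int64
	OwnerID int64
	ForkID  int64 // ID of the parent repository when IsFork is true
	IsFork  bool
}

var errRepoNotFound = errors.New("repository not found")

// ownersInForkChain returns the owner IDs of start and of all its fork
// ancestors. It assumes the fork chain contains no cycle.
func ownersInForkChain(repos map[int64]*Repo, start *Repo) (map[int64]bool, error) {
	owners := make(map[int64]bool)
	for cur := start; ; {
		owners[cur.OwnerID] = true
		if !cur.IsFork {
			return owners, nil
		}
		parent, ok := repos[cur.ForkID]
		if !ok {
			return nil, fmt.Errorf("parent %d of repo %d: %w", cur.ForkID, cur.ID, errRepoNotFound)
		}
		cur = parent
	}
}

// eligibleOwners keeps the candidates that own no repository in the chain.
func eligibleOwners(candidates []int64, chainOwners map[int64]bool) []int64 {
	var out []int64
	for _, id := range candidates {
		if !chainOwners[id] {
			out = append(out, id)
		}
	}
	return out
}

func main() {
	repos := map[int64]*Repo{
		1: {ID: 1, OwnerID: 10},
		2: {ID: 2, OwnerID: 20, ForkID: 1, IsFork: true},
		3: {ID: 3, OwnerID: 30, ForkID: 2, IsFork: true},
	}
	owners, err := ownersInForkChain(repos, repos[3])
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Println(eligibleOwners([]int64{20, 40, 10, 50}, owners))
}
```

Collecting the chain owners once and filtering afterwards avoids removing elements from a slice while iterating over it, which the original loop handles by breaking out after each removal.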

|

|

|

|

|

|

|

|

|

|

// ForkByID redirects (with 301 Moved Permanently) to the repository's `/fork` page

|

|

|

|

|

func ForkByID(ctx *context.Context) {

|

|

|

|

|

ctx.Redirect(ctx.Repo.Repository.Link()+"/fork", http.StatusMovedPermanently)

|

2015-08-08 12:10:34 +03:00

|

|

|

}

|

|

|

|

|

|

2024-02-09 17:57:08 +03:00

|

|

|

// Fork renders the repository fork page

|

2016-03-11 19:56:52 +03:00

|

|

|

func Fork(ctx *context.Context) {

|

2015-08-08 12:10:34 +03:00

|

|

|

ctx.Data["Title"] = ctx.Tr("new_fork")

|

|

|

|

|

|

2022-12-28 00:21:14 +03:00

|

|

|

if ctx.Doer.CanForkRepo() {

|

|

|

|

|

ctx.Data["CanForkRepo"] = true

|

|

|

|

|

} else {

|

|

|

|

|

maxCreationLimit := ctx.Doer.MaxCreationLimit()

|

|

|

|

|

msg := ctx.TrN(maxCreationLimit, "repo.form.reach_limit_of_creation_1", "repo.form.reach_limit_of_creation_n", maxCreationLimit)

|

2023-07-15 11:52:03 +03:00

|

|

|

ctx.Flash.Error(msg, true)

|

2022-12-28 00:21:14 +03:00

|

|

|

}

|

|

|

|

|

|

2024-02-09 17:57:08 +03:00

|

|

|

if !updateForkRepositoryInContext(ctx, ctx.Repo.Repository) {

|

2015-08-08 12:10:34 +03:00

|

|

|

return

|

|

|

|

|

}

|

|

|

|

|

|

2021-04-05 18:30:52 +03:00

|

|

|

ctx.HTML(http.StatusOK, tplFork)

|

2015-08-08 12:10:34 +03:00

|

|

|

}

|

|

|

|

|

|

2016-11-24 10:04:31 +03:00

|

|

|

// ForkPost handles the submitted fork form and creates the fork

|

2021-01-26 18:36:53 +03:00

|

|

|

func ForkPost(ctx *context.Context) {

|

2021-04-06 22:44:05 +03:00

|

|

|

form := web.GetForm(ctx).(*forms.CreateRepoForm)

|

2015-08-08 12:10:34 +03:00

|

|

|

ctx.Data["Title"] = ctx.Tr("new_fork")

|

2023-04-03 17:11:05 +03:00

|

|

|

ctx.Data["CanForkRepo"] = true

|

2015-08-08 12:10:34 +03:00

|

|

|

|

2017-10-15 18:06:07 +03:00

|

|

|

ctxUser := checkContextUser(ctx, form.UID)

|

2015-08-08 12:10:34 +03:00

|

|

|

if ctx.Written() {

|

|

|

|

|

return

|

|

|

|

|

}

|

|

|

|

|

|

2024-02-09 17:57:08 +03:00

|

|

|

forkRepo := ctx.Repo.Repository

|

|

|

|

|

if !updateForkRepositoryInContext(ctx, forkRepo) {

|

2015-08-08 12:10:34 +03:00

|

|

|

return

|

|

|

|

|

}

|

2017-10-15 18:06:07 +03:00

|

|

|

|

2015-08-08 12:10:34 +03:00

|

|

|

ctx.Data["ContextUser"] = ctxUser

|

|

|

|

|

|

2024-07-06 11:30:16 +03:00

|

|

|

if !ctx.CheckQuota(quota_model.LimitSubjectSizeReposAll, ctxUser.ID, ctxUser.Name) {

|

|

|

|

|

return

|

|

|

|

|

}

|

|

|

|

|

|

2015-08-08 12:10:34 +03:00

|

|

|

if ctx.HasError() {

|

2021-04-05 18:30:52 +03:00

|

|

|

ctx.HTML(http.StatusOK, tplFork)

|

2015-08-08 12:10:34 +03:00

|

|

|

return

|

|

|

|

|

}

|

|

|

|

|

|

2017-11-06 07:12:55 +03:00

|

|

|

var err error

|

2022-01-20 20:46:10 +03:00

|

|

|

traverseParentRepo := forkRepo

|

2017-11-06 07:12:55 +03:00

|

|

|

for {

|

|

|

|

|

if ctxUser.ID == traverseParentRepo.OwnerID {

|

|

|

|

|

ctx.RenderWithErr(ctx.Tr("repo.settings.new_owner_has_same_repo"), tplFork, &form)

|

|

|

|

|

return

|

|

|

|

|

}

|

2023-09-14 20:09:32 +03:00

|

|

|

repo := repo_model.GetForkedRepo(ctx, ctxUser.ID, traverseParentRepo.ID)

|

2021-11-22 18:21:55 +03:00

|

|

|

if repo != nil {

|

2021-11-16 21:18:25 +03:00

|

|

|

ctx.Redirect(ctxUser.HomeLink() + "/" + url.PathEscape(repo.Name))

|

2017-11-06 07:12:55 +03:00

|

|

|

return

|

|

|

|

|

}

|

|

|

|

|

if !traverseParentRepo.IsFork {

|

|

|

|

|

break

|

|

|

|

|

}

|

2022-12-03 05:48:26 +03:00

|

|

|

traverseParentRepo, err = repo_model.GetRepositoryByID(ctx, traverseParentRepo.ForkID)

|

2017-11-06 07:12:55 +03:00

|

|

|

if err != nil {

|

2018-01-11 00:34:17 +03:00

|

|

|

ctx.ServerError("GetRepositoryByID", err)

|

2017-11-06 07:12:55 +03:00

|

|

|

return

|

|

|

|

|

}

|

2017-07-26 10:17:38 +03:00

|

|

|

}

|

|

|

|

|

|

2021-11-25 08:03:03 +03:00

|

|

|

// Check whether the user is allowed to create repositories in the organization.

|

2015-08-08 12:10:34 +03:00

|

|

|

if ctxUser.IsOrganization() {

|

2023-10-03 13:30:41 +03:00

|

|

|

isAllowedToFork, err := organization.OrgFromUser(ctxUser).CanCreateOrgRepo(ctx, ctx.Doer.ID)

|

2017-12-21 10:43:26 +03:00

|

|

|

if err != nil {

|

2021-11-25 08:03:03 +03:00

|

|

|

ctx.ServerError("CanCreateOrgRepo", err)

|

2017-12-21 10:43:26 +03:00

|

|

|

return

|

2021-11-25 08:03:03 +03:00

|

|

|

} else if !isAllowedToFork {

|

2021-04-05 18:30:52 +03:00

|

|

|

ctx.Error(http.StatusForbidden)

|

2015-08-08 12:10:34 +03:00

|

|

|

return

|

|

|

|

|

}

|

|

|

|

|

}

|

|

|

|

|

|

2024-08-08 10:46:38 +03:00

|

|

|

repo, err := repo_service.ForkRepositoryAndUpdates(ctx, ctx.Doer, ctxUser, repo_service.ForkRepoOptions{

|

2023-09-29 04:48:39 +03:00

|

|

|

BaseRepo: forkRepo,

|

|

|

|

|

Name: form.RepoName,

|

|

|

|

|

Description: form.Description,

|

|

|

|

|

SingleBranch: form.ForkSingleBranch,

|

2021-08-28 11:37:14 +03:00

|

|

|

})

|

2015-08-08 12:10:34 +03:00

|

|

|

if err != nil {

|

2015-08-31 10:24:28 +03:00

|

|

|

ctx.Data["Err_RepoName"] = true

|

2015-08-08 12:10:34 +03:00

|

|

|

switch {

|

2022-12-28 00:21:14 +03:00

|

|

|

case repo_model.IsErrReachLimitOfRepo(err):

|

|

|

|

|

maxCreationLimit := ctxUser.MaxCreationLimit()

|

|

|

|

|

msg := ctx.TrN(maxCreationLimit, "repo.form.reach_limit_of_creation_1", "repo.form.reach_limit_of_creation_n", maxCreationLimit)

|

|

|

|

|

ctx.RenderWithErr(msg, tplFork, &form)

|

2021-12-12 18:48:20 +03:00

|

|

|

case repo_model.IsErrRepoAlreadyExist(err):

|

2016-11-24 10:04:31 +03:00

|

|

|

ctx.RenderWithErr(ctx.Tr("repo.settings.new_owner_has_same_repo"), tplFork, &form)

|

2023-05-22 13:21:46 +03:00

|

|

|

case repo_model.IsErrRepoFilesAlreadyExist(err):

|

|

|

|

|

switch {

|

|

|

|

|

case ctx.IsUserSiteAdmin() || (setting.Repository.AllowAdoptionOfUnadoptedRepositories && setting.Repository.AllowDeleteOfUnadoptedRepositories):

|

|

|

|

|

ctx.RenderWithErr(ctx.Tr("form.repository_files_already_exist.adopt_or_delete"), tplFork, form)

|

|

|

|

|

case setting.Repository.AllowAdoptionOfUnadoptedRepositories:

|

|

|

|

|

ctx.RenderWithErr(ctx.Tr("form.repository_files_already_exist.adopt"), tplFork, form)

|

|

|

|

|

case setting.Repository.AllowDeleteOfUnadoptedRepositories:

|

|

|

|

|

ctx.RenderWithErr(ctx.Tr("form.repository_files_already_exist.delete"), tplFork, form)

|

|

|

|

|

default:

|

|

|

|

|

ctx.RenderWithErr(ctx.Tr("form.repository_files_already_exist"), tplFork, form)

|

|

|

|

|

}

|

2021-11-24 12:49:20 +03:00

|

|

|

case db.IsErrNameReserved(err):

|

|

|

|

|

ctx.RenderWithErr(ctx.Tr("repo.form.name_reserved", err.(db.ErrNameReserved).Name), tplFork, &form)

|

|

|

|

|

case db.IsErrNamePatternNotAllowed(err):

|

|

|

|

|

ctx.RenderWithErr(ctx.Tr("repo.form.name_pattern_not_allowed", err.(db.ErrNamePatternNotAllowed).Pattern), tplFork, &form)

|

2015-08-08 12:10:34 +03:00

|

|

|

default:

|

2018-01-11 00:34:17 +03:00

|

|

|

ctx.ServerError("ForkPost", err)

|

2015-08-08 12:10:34 +03:00

|

|

|

}

|

|

|

|

|

return

|

|

|

|

|

}

|

|

|

|

|

|

2015-08-08 17:43:14 +03:00

|

|

|

log.Trace("Repository forked[%d]: %s/%s", forkRepo.ID, ctxUser.Name, repo.Name)

|

2021-11-16 21:18:25 +03:00

|

|

|

ctx.Redirect(ctxUser.HomeLink() + "/" + url.PathEscape(repo.Name))

|

2015-08-08 12:10:34 +03:00

|

|

|

}
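`ForkPost` calls `ctx.CheckQuota` before forking, which follows the soft-quota rule from the quota commit notes: usage is checked before the operation, and the operation is denied only if the user is already over quota. The sketch below illustrates that rule in isolation. The `softQuota` type and its methods are hypothetical and are not the quota engine's API, and treating "over quota" as `used > limit` is an assumption.

```go
package main

import (
	"fmt"
	"net/http"
)

// softQuota is a hypothetical per-user counter used only for illustration.
type softQuota struct {
	limit int64
	used  int64
}

// check returns 200 when an operation may start and 413 when the user is
// already over quota. "Over quota" is assumed to mean used > limit.
func (q *softQuota) check() int {
	if q.used > q.limit {
		return http.StatusRequestEntityTooLarge
	}
	return http.StatusOK
}

// perform checks the quota first and only then records the size. The size of
// the operation itself is never compared against the remaining room, which is
// what makes the quota soft.
func (q *softQuota) perform(size int64) int {
	if status := q.check(); status != http.StatusOK {
		return status
	}
	q.used += size
	return http.StatusOK
}

func main() {
	q := &softQuota{limit: 100, used: 90}
	fmt.Println(q.perform(50), q.used) // allowed although it pushes usage past the limit
	fmt.Println(q.perform(1), q.used)  // denied: the user is now over quota
	q.used = 80                        // simulate the user freeing space
	fmt.Println(q.perform(1), q.used)  // allowed again
}
```

A hard quota would instead compare `used + size` against the limit, which requires knowing the size of an operation before it runs; the commit notes name that as the reason for choosing the soft variant.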

|

|

|

|

|

|

2023-08-07 06:43:18 +03:00

|

|

|

func getPullInfo(ctx *context.Context) (issue *issues_model.Issue, ok bool) {

|

2023-07-22 17:14:27 +03:00

|

|

|

issue, err := issues_model.GetIssueByIndex(ctx, ctx.Repo.Repository.ID, ctx.ParamsInt64(":index"))

|

2015-09-02 11:08:05 +03:00

|

|

|

if err != nil {

|

2022-06-13 12:37:59 +03:00

|

|

|

if issues_model.IsErrIssueNotExist(err) {

|

2018-01-11 00:34:17 +03:00

|

|

|

ctx.NotFound("GetIssueByIndex", err)

|

2015-09-02 11:08:05 +03:00

|

|

|

} else {

|

2018-01-11 00:34:17 +03:00

|

|

|

ctx.ServerError("GetIssueByIndex", err)

|

2015-09-02 11:08:05 +03:00

|

|

|

}

|

2023-08-07 06:43:18 +03:00

|

|

|

return nil, false

|

2015-09-02 11:08:05 +03:00

|

|

|

}

|

2022-11-19 11:12:33 +03:00

|

|

|

if err = issue.LoadPoster(ctx); err != nil {

|

2018-12-13 18:55:43 +03:00

|

|

|

ctx.ServerError("LoadPoster", err)

|

2023-08-07 06:43:18 +03:00

|

|

|

return nil, false

|

2018-12-13 18:55:43 +03:00

|

|

|

}

|

2022-04-08 12:11:15 +03:00

|

|

|

if err := issue.LoadRepo(ctx); err != nil {

|

2019-10-23 20:54:13 +03:00

|

|

|

ctx.ServerError("LoadRepo", err)

|

2023-08-07 06:43:18 +03:00

|

|

|

return nil, false

|

2019-10-23 20:54:13 +03:00

|

|

|

}

|

2024-02-25 01:34:51 +03:00

|

|

|

ctx.Data["Title"] = fmt.Sprintf("#%d - %s", issue.Index, emoji.ReplaceAliases(issue.Title))

|

2015-10-19 02:30:39 +03:00

|

|

|

ctx.Data["Issue"] = issue

|

2015-09-02 11:08:05 +03:00

|

|

|

|

2015-10-19 02:30:39 +03:00

|

|

|

if !issue.IsPull {

|

2018-01-11 00:34:17 +03:00

|

|

|

ctx.NotFound("ViewPullCommits", nil)

|

2023-08-07 06:43:18 +03:00

|

|

|

return nil, false

|

2015-09-02 11:08:05 +03:00

|

|

|

}

|

|

|

|

|

|

2022-11-19 11:12:33 +03:00

|

|

|

if err = issue.LoadPullRequest(ctx); err != nil {

|

2018-12-13 18:55:43 +03:00

|

|

|

ctx.ServerError("LoadPullRequest", err)

|

2023-08-07 06:43:18 +03:00

|

|

|

return nil, false

|

2018-12-13 18:55:43 +03:00

|

|

|

}

|

|

|

|

|

|

2022-11-19 11:12:33 +03:00

|

|

|

if err = issue.PullRequest.LoadHeadRepo(ctx); err != nil {

|

2020-03-03 01:31:55 +03:00

|

|

|

ctx.ServerError("LoadHeadRepo", err)

|

2023-08-07 06:43:18 +03:00

|

|

|

return nil, false

|

2015-09-02 11:08:05 +03:00

|

|

|

}

|

|

|

|

|

|

|

|

|

|

if ctx.IsSigned {

|

|

|

|

|

// Update issue-user.

|

2022-08-25 05:31:57 +03:00

|

|

|

if err = activities_model.SetIssueReadBy(ctx, issue.ID, ctx.Doer.ID); err != nil {

|

2018-01-11 00:34:17 +03:00

|

|

|

ctx.ServerError("SetIssueReadBy", err)

|

2023-08-07 06:43:18 +03:00

|

|

|

return nil, false

|

2015-09-02 11:08:05 +03:00

|

|

|

}

|

|

|

|

|

}

|

|

|

|

|

|

2023-08-07 06:43:18 +03:00

|

|

|

return issue, true

|

2015-09-02 11:08:05 +03:00

|

|

|

}

|

|

|

|

|

|

2022-06-13 12:37:59 +03:00

|

|

|

func setMergeTarget(ctx *context.Context, pull *issues_model.PullRequest) {

|

2022-11-19 11:12:33 +03:00

|

|

|

if ctx.Repo.Owner.Name == pull.MustHeadUserName(ctx) {

|

2017-10-04 20:35:01 +03:00

|

|

|

ctx.Data["HeadTarget"] = pull.HeadBranch

|

|

|

|

|

} else if pull.HeadRepo == nil {

|

2022-11-19 11:12:33 +03:00

|

|

|

ctx.Data["HeadTarget"] = pull.MustHeadUserName(ctx) + ":" + pull.HeadBranch

|

2017-10-04 20:35:01 +03:00

|

|

|

} else {

|

2022-11-19 11:12:33 +03:00

|

|

|

ctx.Data["HeadTarget"] = pull.MustHeadUserName(ctx) + "/" + pull.HeadRepo.Name + ":" + pull.HeadBranch

|

2017-10-04 20:35:01 +03:00

|

|

|

}

|

2024-02-23 03:26:17 +03:00

|

|

|

|

|

|

|

|

if pull.Flow == issues_model.PullRequestFlowAGit {

|

|

|

|

|

ctx.Data["MadeUsingAGit"] = true

|

|

|

|

|

}

|

|

|

|

|

|

2017-10-04 20:35:01 +03:00

|

|

|

ctx.Data["BaseTarget"] = pull.BaseBranch

|

2023-10-11 07:24:07 +03:00

|

|

|

ctx.Data["HeadBranchLink"] = pull.GetHeadBranchLink(ctx)

|

|

|

|

|

ctx.Data["BaseBranchLink"] = pull.GetBaseBranchLink(ctx)

|

2017-10-04 20:35:01 +03:00

|

|

|

}

|

|

|

|

|

|

2023-07-03 04:00:28 +03:00

|

|

|

// GetPullDiffStats computes the diff stats of a pull request and stores them in ctx.Data["Diff"]

|

|

|

|

|

func GetPullDiffStats(ctx *context.Context) {

|

2023-08-07 06:43:18 +03:00

|

|

|

issue, ok := getPullInfo(ctx)

|

|

|

|

|

if !ok {

|

|

|

|

|

return

|

|

|

|

|

}

|

2016-08-16 20:19:09 +03:00

|

|

|

pull := issue.PullRequest

|

2015-09-02 16:26:56 +03:00

|

|

|

|

2023-07-03 04:00:28 +03:00

|

|

|

mergeBaseCommitID := GetMergedBaseCommitID(ctx, issue)

|

|

|

|

|

|

2023-08-07 06:43:18 +03:00

|

|

|

if mergeBaseCommitID == "" {

|

2023-07-03 04:00:28 +03:00

|

|

|

ctx.NotFound("PullFiles", nil)

|

|

|

|

|

return

|

|

|

|

|

}

|

|

|

|

|

|

|

|

|

|

headCommitID, err := ctx.Repo.GitRepo.GetRefCommitID(pull.GetGitRefName())

|

|

|

|

|

if err != nil {

|

|

|

|

|

ctx.ServerError("GetRefCommitID", err)

|

|

|

|

|

return

|

|

|

|

|

}

|

|

|

|

|

|

|

|

|

|

diffOptions := &gitdiff.DiffOptions{

|

|

|

|

|

BeforeCommitID: mergeBaseCommitID,

|

|

|

|

|

AfterCommitID: headCommitID,

|

|

|

|

|

MaxLines: setting.Git.MaxGitDiffLines,

|

|

|

|

|

MaxLineCharacters: setting.Git.MaxGitDiffLineCharacters,

|

|

|

|

|

MaxFiles: setting.Git.MaxGitDiffFiles,

|

|

|

|

|

WhitespaceBehavior: gitdiff.GetWhitespaceFlag(ctx.Data["WhitespaceBehavior"].(string)),

|

|

|

|

|

}

|

|

|

|

|

|

|

|

|

|

diff, err := gitdiff.GetPullDiffStats(ctx.Repo.GitRepo, diffOptions)

|

|

|

|

|

if err != nil {

|

|

|

|

|

ctx.ServerError("GetPullDiffStats", err)

|

|

|

|

|

return

|

|

|

|

|

}

|

|

|

|

|

|

|

|

|

|

ctx.Data["Diff"] = diff

|

|

|

|

|

}

|

|

|

|

|

|

|

|

|

|

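// GetMergedBaseCommitID returns the merge base commit ID of a pull request. If none is stored, it tries to recover one from the pull head ref or the patch.

func GetMergedBaseCommitID(ctx *context.Context, issue *issues_model.Issue) string {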

|

|

|

|

|

pull := issue.PullRequest

|

2017-10-04 20:35:01 +03:00

|

|

|

|

2021-12-23 11:32:29 +03:00

|

|

|

var baseCommit string

|

|

|

|

|

// Some migrated PRs have no base SHA and would lose their history; try to recover one

|

|

|

|

|

if pull.MergeBase == "" {

|

|

|

|

|

var commitSHA, parentCommit string

|

|

|

|

|

// If there is a head or a patch file, and it is readable, grab info

|

2021-12-23 16:44:00 +03:00

|

|

|

commitSHA, err := ctx.Repo.GitRepo.GetRefCommitID(pull.GetGitRefName())

|

2021-12-23 11:32:29 +03:00

|

|

|

if err != nil {

|

|

|

|

|

// Head File does not exist, try the patch

|

|

|

|

|

commitSHA, err = ctx.Repo.GitRepo.ReadPatchCommit(pull.Index)

|

|

|

|

|

if err == nil {

|

|

|

|

|

// Recreate pull head in files for next time

|

2021-12-23 16:44:00 +03:00

|

|

|

if err := ctx.Repo.GitRepo.SetReference(pull.GetGitRefName(), commitSHA); err != nil {

|

2021-12-23 11:32:29 +03:00

|

|

|

log.Error("Could not write head file: %v", err)

|

|

|

|

|

}

|

|

|

|

|

} else {

|

|

|

|

|

// There is no history available

|

|

|

|

|

log.Trace("No history file available for PR %d", pull.Index)

|

|

|

|

|

}

|

|

|

|

|

}

|

|

|

|

|

if commitSHA != "" {

|

|

|

|

|

// Get immediate parent of the first commit in the patch, grab history back

|

2022-10-23 17:44:45 +03:00

|

|

|

parentCommit, _, err = git.NewCommand(ctx, "rev-list", "-1", "--skip=1").AddDynamicArguments(commitSHA).RunStdString(&git.RunOpts{Dir: ctx.Repo.GitRepo.Path})

|

2021-12-23 11:32:29 +03:00

|

|

|

if err == nil {

|

|

|

|

|

parentCommit = strings.TrimSpace(parentCommit)

|

|

|

|

|

}

|

|

|

|

|

// Special case on Git < 2.25 that doesn't fail on immediate empty history

|

|

|

|

|

if err != nil || parentCommit == "" {

|

|

|

|

|

log.Info("No known parent commit for PR %d, error: %v", pull.Index, err)

|

|

|

|

|

// Fall back to the head commit itself so that at least partial history is available

|

|

|

|

|

parentCommit = commitSHA

|

|

|

|

|

}

|

|

|

|

|

}

|

|

|

|

|

baseCommit = parentCommit

|

|

|

|

|

} else {

|

|

|

|

|

// Keep an empty history or original commit

|

|

|

|

|

baseCommit = pull.MergeBase

|

|

|

|

|

}

|

|

|

|

|

|

2023-07-03 04:00:28 +03:00

|

|

|

return baseCommit

|

|

|

|

|

}

|

|

|

|

|

|

|

|

|

|

// PrepareMergedViewPullInfo shows meta information for a merged pull request view page

|

|

|

|

|

func PrepareMergedViewPullInfo(ctx *context.Context, issue *issues_model.Issue) *git.CompareInfo {

|

|

|

|

|

pull := issue.PullRequest

|

|

|

|

|

|

|

|

|

|

setMergeTarget(ctx, pull)

|

|

|

|

|

ctx.Data["HasMerged"] = true

|

|

|

|

|

|

|

|

|

|

baseCommit := GetMergedBaseCommitID(ctx, issue)

|

|

|

|

|

|

2019-06-12 02:32:08 +03:00

|

|

|

compareInfo, err := ctx.Repo.GitRepo.GetCompareInfo(ctx.Repo.Repository.RepoPath(),

|

2022-01-18 10:45:43 +03:00

|

|

|

baseCommit, pull.GetGitRefName(), false, false)

|

2015-09-02 16:26:56 +03:00

|

|

|

if err != nil {

|

2020-10-20 15:52:54 +03:00

|

|

|

if strings.Contains(err.Error(), "fatal: Not a valid object name") || strings.Contains(err.Error(), "unknown revision or path not in the working tree") {

|

2018-08-01 06:00:35 +03:00

|

|

|

ctx.Data["IsPullRequestBroken"] = true

|

2020-03-03 01:31:55 +03:00

|

|

|

ctx.Data["BaseTarget"] = pull.BaseBranch

|

2018-01-19 09:18:51 +03:00

|

|

|

ctx.Data["NumCommits"] = 0

|

|

|

|

|

ctx.Data["NumFiles"] = 0

|

|

|

|

|

return nil

|

|

|

|

|

}

|

|

|

|

|

|

2019-06-07 23:29:29 +03:00

|

|

|

ctx.ServerError("GetCompareInfo", err)

|

2018-01-19 09:18:51 +03:00

|

|

|

return nil

|

2015-09-02 16:26:56 +03:00

|

|

|

}

|

2021-08-09 21:08:51 +03:00

|

|

|

ctx.Data["NumCommits"] = len(compareInfo.Commits)

|

2019-06-12 02:32:08 +03:00

|

|

|

ctx.Data["NumFiles"] = compareInfo.NumFiles

|

2020-12-18 15:37:55 +03:00

|

|

|

|

2021-08-09 21:08:51 +03:00

|

|

|

if len(compareInfo.Commits) != 0 {

|

|

|

|

|

sha := compareInfo.Commits[0].ID.String()

|

2024-03-22 15:53:52 +03:00

|

|

|

commitStatuses, _, err := git_model.GetLatestCommitStatus(ctx, ctx.Repo.Repository.ID, sha, db.ListOptionsAll)

|

2020-12-18 15:37:55 +03:00

|

|

|

if err != nil {

|

|

|

|

|

ctx.ServerError("GetLatestCommitStatus", err)

|

|

|

|

|

return nil

|

|

|

|

|

}

|

2024-07-28 18:11:40 +03:00

|

|

|

if !ctx.Repo.CanRead(unit.TypeActions) {

|

|

|

|

|

git_model.CommitStatusesHideActionsURL(ctx, commitStatuses)

|

|

|

|

|

}

|

|

|

|

|

|

2020-12-18 15:37:55 +03:00

|

|

|

if len(commitStatuses) != 0 {

|

|

|

|

|

ctx.Data["LatestCommitStatuses"] = commitStatuses

|

2022-06-12 18:51:54 +03:00

|

|

|

ctx.Data["LatestCommitStatus"] = git_model.CalcCommitStatus(commitStatuses)

|

2020-12-18 15:37:55 +03:00

|

|

|

}

|

|

|

|

|

}

|

|

|

|

|

|

2019-06-12 02:32:08 +03:00

|

|

|

return compareInfo

|

2015-09-02 16:26:56 +03:00

|

|

|

}

|

|

|

|

|

|

2016-11-24 10:04:31 +03:00

|

|

|

// PrepareViewPullInfo shows meta information for a pull request preview page

|

2022-06-13 12:37:59 +03:00

|

|

|

func PrepareViewPullInfo(ctx *context.Context, issue *issues_model.Issue) *git.CompareInfo {

|

2022-02-11 11:02:53 +03:00

|

|

|

ctx.Data["PullRequestWorkInProgressPrefixes"] = setting.Repository.PullRequest.WorkInProgressPrefixes

|

|

|

|

|

|

2015-09-02 11:08:05 +03:00

|

|

|

repo := ctx.Repo.Repository

|

2016-08-16 20:19:09 +03:00

|

|

|

pull := issue.PullRequest

|

2015-09-02 11:08:05 +03:00

|

|

|

|

2022-11-19 11:12:33 +03:00

|

|

|

if err := pull.LoadHeadRepo(ctx); err != nil {

|

2020-03-03 01:31:55 +03:00

|

|

|

ctx.ServerError("LoadHeadRepo", err)

|

2015-10-24 10:36:47 +03:00

|

|

|

return nil

|

|

|

|

|

}

|

|

|

|

|

|

2022-11-19 11:12:33 +03:00

|

|

|

if err := pull.LoadBaseRepo(ctx); err != nil {

|

2020-03-03 01:31:55 +03:00

|

|

|

ctx.ServerError("LoadBaseRepo", err)

|

2020-01-07 20:06:14 +03:00

|

|

|

return nil

|

|

|

|

|

}

|

|

|

|

|

|

2017-10-04 20:35:01 +03:00

|

|

|

setMergeTarget(ctx, pull)

|

|

|

|

|

|

2023-01-16 11:00:22 +03:00

|

|

|

pb, err := git_model.GetFirstMatchProtectedBranchRule(ctx, repo.ID, pull.BaseBranch)

|

|

|

|

|

if err != nil {

|

2020-01-17 09:03:40 +03:00

|

|

|

ctx.ServerError("GetFirstMatchProtectedBranchRule", err)

|

2019-09-18 08:39:45 +03:00

|

|

|

return nil

|

|

|

|

|

}

|

2023-01-16 11:00:22 +03:00

|

|

|

ctx.Data["EnableStatusCheck"] = pb != nil && pb.EnableStatusCheck

|

2019-09-18 08:39:45 +03:00

|

|

|

|

2022-01-20 02:26:57 +03:00

|

|

|

var baseGitRepo *git.Repository

|

|

|

|

|

if pull.BaseRepoID == ctx.Repo.Repository.ID && ctx.Repo.GitRepo != nil {

|

|

|

|

|

baseGitRepo = ctx.Repo.GitRepo

|

|

|

|

|

} else {

|

Simplify how git repositories are opened (#28937)

## Purpose

This is a refactor toward building an abstraction over managing git

repositories.

Afterwards, it does not matter anymore if they are stored on the local

disk or somewhere remote.

## What this PR changes

We used `git.OpenRepository` everywhere previously.

Now, we should split them into two distinct functions:

Firstly, there are temporary repositories which do not change:

```go

git.OpenRepository(ctx, diskPath)

```

Gitea managed repositories having a record in the database in the

`repository` table are moved into the new package `gitrepo`:

```go

gitrepo.OpenRepository(ctx, repo_model.Repository)

```

Why is `repo_model.Repository` the second parameter instead of file

path?

Because then we can easily adapt our repository storage strategy.

The repositories can be stored locally, however, they could just as well

be stored on a remote server.
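The reasoning above, passing the repository model instead of a path so that the storage strategy can change, can be illustrated with a small sketch. Everything here is hypothetical: `Repository` stands in for `repo_model.Repository`, and `Storage`, `LocalStorage`, `RemoteStorage`, and this `OpenRepository` are illustrative names rather than the `gitrepo` package API.

```go
package main

import (
	"fmt"
	"path"
)

// Repository is a hypothetical stand-in for repo_model.Repository.
type Repository struct {
	OwnerName string
	Name      string
}

// Storage is a hypothetical strategy that decides where a repository lives.
type Storage interface {
	Locate(repo *Repository) string
}

// LocalStorage keeps repositories under a root directory on local disk.
type LocalStorage struct{ Root string }

func (s LocalStorage) Locate(repo *Repository) string {
	return path.Join(s.Root, repo.OwnerName, repo.Name+".git")
}

// RemoteStorage resolves repositories to a URL on another host.
type RemoteStorage struct{ BaseURL string }

func (s RemoteStorage) Locate(repo *Repository) string {
	return s.BaseURL + "/" + repo.OwnerName + "/" + repo.Name + ".git"
}

// OpenRepository takes the model, never a path, so call sites stay the same
// when the storage strategy is swapped. It returns the resolved location only.
func OpenRepository(s Storage, repo *Repository) string {
	return s.Locate(repo)
}

func main() {
	repo := &Repository{OwnerName: "alice", Name: "demo"}
	fmt.Println(OpenRepository(LocalStorage{Root: "/data/repos"}, repo))
	fmt.Println(OpenRepository(RemoteStorage{BaseURL: "https://git.example.com"}, repo))
}
```

The call sites are identical for both strategies, which is the property the refactor is after.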

## Further changes in other PRs

- A Git command wrapper could be added to package `gitrepo`, i.e. `NewCommand(ctx, repo_model.Repository, commands...)`. The `Dir` field of `git.RunOpts` (currently set to `repo.RepoPath()`) should be empty before this method is invoked and be filled in only inside the function. #28940

- Remove the `RepoPath()`/`WikiPath()` functions to reduce the

possibility of mistakes.

---------

Co-authored-by: delvh <dev.lh@web.de>

2024-01-27 23:09:51 +03:00

|

|

|

baseGitRepo, err = gitrepo.OpenRepository(ctx, pull.BaseRepo) // assign the outer variable; := would shadow it and leave it nil

|

2022-01-20 02:26:57 +03:00

|

|

|

if err != nil {

|

|

|

|

|

ctx.ServerError("OpenRepository", err)

|

|

|

|

|

return nil

|

|

|

|

|

}

|

|

|

|

|

defer baseGitRepo.Close()

|

2020-01-07 20:06:14 +03:00

|

|

|

}

|

2020-03-05 21:51:21 +03:00

|

|

|

|

|

|

|

|

if !baseGitRepo.IsBranchExist(pull.BaseBranch) {

|

|

|

|

|

ctx.Data["IsPullRequestBroken"] = true

|

|

|

|

|

ctx.Data["BaseTarget"] = pull.BaseBranch

|

|

|

|

|

ctx.Data["HeadTarget"] = pull.HeadBranch

|

2020-03-31 16:42:44 +03:00

|

|

|

|

|

|

|

|

sha, err := baseGitRepo.GetRefCommitID(pull.GetGitRefName())

|

|

|

|

|

if err != nil {

|

|

|

|

|

ctx.ServerError(fmt.Sprintf("GetRefCommitID(%s)", pull.GetGitRefName()), err)

|

|

|

|

|

return nil

|

|

|

|

|

}

|

2024-03-22 15:53:52 +03:00

|

|

|

commitStatuses, _, err := git_model.GetLatestCommitStatus(ctx, repo.ID, sha, db.ListOptionsAll)

|

2020-03-31 16:42:44 +03:00

|

|

|

if err != nil {

|

|

|

|

|

ctx.ServerError("GetLatestCommitStatus", err)

|

|

|

|

|

return nil

|

|

|

|

|

}

|

2024-07-28 18:11:40 +03:00

|

|

|

if !ctx.Repo.CanRead(unit.TypeActions) {

|

|

|

|

|

git_model.CommitStatusesHideActionsURL(ctx, commitStatuses)

|

|

|

|

|

}

|

|

|

|

|

|

2020-03-31 16:42:44 +03:00

|

|

|

if len(commitStatuses) > 0 {

|

|

|

|

|

ctx.Data["LatestCommitStatuses"] = commitStatuses

|

2022-06-12 18:51:54 +03:00

|

|

|

ctx.Data["LatestCommitStatus"] = git_model.CalcCommitStatus(commitStatuses)

|

2020-03-31 16:42:44 +03:00

|

|

|

}

|

|

|

|

|

|

|

|

|

|

compareInfo, err := baseGitRepo.GetCompareInfo(pull.BaseRepo.RepoPath(),

|

2022-01-18 10:45:43 +03:00

|

|

|

pull.MergeBase, pull.GetGitRefName(), false, false)

|

2020-03-31 16:42:44 +03:00

|

|

|

if err != nil {

|

|

|

|

|

if strings.Contains(err.Error(), "fatal: Not a valid object name") {

|

|

|

|

|

ctx.Data["IsPullRequestBroken"] = true

|

|

|

|

|

ctx.Data["BaseTarget"] = pull.BaseBranch

|

|

|

|

|

ctx.Data["NumCommits"] = 0

|

|

|

|

|

ctx.Data["NumFiles"] = 0

|

|

|

|

|

return nil

|

|

|

|

|

}

|

|

|

|

|

|

|

|

|

|

ctx.ServerError("GetCompareInfo", err)

|

|

|

|

|

return nil

|

|

|

|

|

}

|

|

|

|

|

|

2021-08-09 21:08:51 +03:00

|

|

|

ctx.Data["NumCommits"] = len(compareInfo.Commits)

|

2020-03-31 16:42:44 +03:00

|

|

|

ctx.Data["NumFiles"] = compareInfo.NumFiles

|

|

|

|

|

return compareInfo

|

2020-03-05 21:51:21 +03:00

|

|

|

}

|

|

|

|

|

|

2019-06-30 10:57:59 +03:00

|

|

|

var headBranchExist bool

|

2020-01-07 20:06:14 +03:00

|

|

|

var headBranchSha string

|

2019-06-30 10:57:59 +03:00

|

|

|

// HeadRepo may be missing

|

2015-10-05 03:54:06 +03:00

|

|

|

if pull.HeadRepo != nil {

|

2024-11-02 06:29:37 +03:00

|

|

|

headGitRepo, closer, err := gitrepo.RepositoryFromContextOrOpen(ctx, pull.HeadRepo)

|

2015-10-05 03:54:06 +03:00

|

|

|

if err != nil {

|

2024-11-02 06:29:37 +03:00

|

|

|

ctx.ServerError("RepositoryFromContextOrOpen", err)

|

2015-10-05 03:54:06 +03:00

|

|

|

return nil

|

|

|

|

|

}

|

2024-11-02 06:29:37 +03:00

|

|

|

defer closer.Close()

|

2019-06-30 10:57:59 +03:00

|

|

|

|

2022-06-13 12:37:59 +03:00

|

|

|

if pull.Flow == issues_model.PullRequestFlowGithub {

|

2021-07-28 12:42:56 +03:00

|

|

|

headBranchExist = headGitRepo.IsBranchExist(pull.HeadBranch)

|

|

|

|

|

} else {

|

2021-11-30 23:06:32 +03:00

|

|

|

headBranchExist = git.IsReferenceExist(ctx, baseGitRepo.Path, pull.GetGitRefName())

|

2021-07-28 12:42:56 +03:00

|

|

|

}

|

2019-06-30 10:57:59 +03:00

|

|

|

|

|

|

|

|

if headBranchExist {

|

2022-06-13 12:37:59 +03:00

|

|

|

if pull.Flow != issues_model.PullRequestFlowGithub {

|

2021-07-28 12:42:56 +03:00

|

|

|

headBranchSha, err = baseGitRepo.GetRefCommitID(pull.GetGitRefName())

|

|

|

|

|

} else {

|

|

|

|

|

headBranchSha, err = headGitRepo.GetBranchCommitID(pull.HeadBranch)

|

|

|

|

|

}

|

2019-06-30 10:57:59 +03:00

|

|

|

if err != nil {

|

|

|

|

|

ctx.ServerError("GetBranchCommitID", err)

|

|

|

|

|

return nil

|

|

|

|

|

}

|

2020-01-07 20:06:14 +03:00

|

|

|

}

|

|

|

|

|

}

|

2019-06-30 10:57:59 +03:00

|

|

|

|

2020-01-25 05:48:22 +03:00

|

|

|

if headBranchExist {

|

2022-01-20 02:26:57 +03:00

|

|

|

var err error

|

2022-04-28 14:48:48 +03:00

|

|

|

ctx.Data["UpdateAllowed"], ctx.Data["UpdateByRebaseAllowed"], err = pull_service.IsUserAllowedToUpdate(ctx, pull, ctx.Doer)

|

2020-01-25 05:48:22 +03:00

|

|

|

if err != nil {

|

|

|

|

|

ctx.ServerError("IsUserAllowedToUpdate", err)

|

|

|

|

|

return nil

|

|

|

|

|

}

|

2022-01-20 02:26:57 +03:00

|

|

|

ctx.Data["GetCommitMessages"] = pull_service.GetSquashMergeCommitMessages(ctx, pull)

|

2022-08-03 07:56:59 +03:00

|

|

|

} else {

|

|

|

|

|

ctx.Data["GetCommitMessages"] = ""

|

2020-01-25 05:48:22 +03:00

|

|

|

}

|

|

|

|

|

|

2020-01-07 20:06:14 +03:00

|

|

|

sha, err := baseGitRepo.GetRefCommitID(pull.GetGitRefName())

|

|

|

|

|

if err != nil {

|

2020-06-08 21:07:41 +03:00

|

|

|

if git.IsErrNotExist(err) {

|

|

|

|

|

ctx.Data["IsPullRequestBroken"] = true

|

|

|

|

|

if pull.IsSameRepo() {

|

|

|

|

|

ctx.Data["HeadTarget"] = pull.HeadBranch

|

|

|

|

|

} else if pull.HeadRepo == nil {

|

2023-08-04 01:07:15 +03:00

|

|

|

ctx.Data["HeadTarget"] = ctx.Locale.Tr("repo.pull.deleted_branch", pull.HeadBranch)

|

2020-06-08 21:07:41 +03:00

|

|

|

} else {

|

|

|

|

|

ctx.Data["HeadTarget"] = pull.HeadRepo.OwnerName + ":" + pull.HeadBranch

|

|

|

|

|

}

|

|

|

|

|

ctx.Data["BaseTarget"] = pull.BaseBranch

|

|

|

|

|

ctx.Data["NumCommits"] = 0

|

|

|

|

|

ctx.Data["NumFiles"] = 0

|

|

|

|

|

return nil

|

|

|

|

|

}

|

2020-01-07 20:06:14 +03:00

|

|

|

ctx.ServerError(fmt.Sprintf("GetRefCommitID(%s)", pull.GetGitRefName()), err)

|

|

|

|

|

return nil

|

|

|

|

|

}

|

|

|

|

|

|

2024-03-22 15:53:52 +03:00

|

|

|

commitStatuses, _, err := git_model.GetLatestCommitStatus(ctx, repo.ID, sha, db.ListOptionsAll)

|

2020-01-07 20:06:14 +03:00

|

|

|

if err != nil {

|

|

|

|

|

ctx.ServerError("GetLatestCommitStatus", err)

|

|

|

|

|

return nil

|

|

|

|

|

}

|

2024-07-28 18:11:40 +03:00

|

|

|

if !ctx.Repo.CanRead(unit.TypeActions) {

|

|

|

|

|

git_model.CommitStatusesHideActionsURL(ctx, commitStatuses)

|

|

|

|

|

}

|

|

|

|

|

|

2020-01-07 20:06:14 +03:00

|

|

|

if len(commitStatuses) > 0 {

|

|

|

|

|

ctx.Data["LatestCommitStatuses"] = commitStatuses

|

2022-06-12 18:51:54 +03:00

|

|

|

ctx.Data["LatestCommitStatus"] = git_model.CalcCommitStatus(commitStatuses)

|

2020-01-07 20:06:14 +03:00

|

|

|

}

|

2019-09-18 08:39:45 +03:00

|

|

|

|

2023-01-16 11:00:22 +03:00

|

|

|

if pb != nil && pb.EnableStatusCheck {

|

2024-02-19 12:57:08 +03:00

|

|

|

var missingRequiredChecks []string

|

|

|

|

|

for _, requiredContext := range pb.StatusCheckContexts {

|

|

|

|

|

contextFound := false

|

|

|

|

|

matchesRequiredContext := createRequiredContextMatcher(requiredContext)

|

|

|

|

|

for _, presentStatus := range commitStatuses {

|

|

|

|

|

if matchesRequiredContext(presentStatus.Context) {

|

|

|

|

|

contextFound = true

|

|

|

|

|

break

|

|

|

|

|

}

|

|

|

|

|

}

|

|

|

|

|

|

|

|

|

|

if !contextFound {

|

|

|

|

|

missingRequiredChecks = append(missingRequiredChecks, requiredContext)

|

|

|

|

|

}

|

|

|

|

|

}

|

|

|

|

|

ctx.Data["MissingRequiredChecks"] = missingRequiredChecks

|

|

|

|

|

|

2020-01-07 20:06:14 +03:00

|

|

|

ctx.Data["is_context_required"] = func(context string) bool {

|

2023-01-16 11:00:22 +03:00

|

|

|

for _, c := range pb.StatusCheckContexts {

|

2023-12-22 16:29:50 +03:00

|

|

|

if c == context {

|

|

|

|

|

return true

|

|

|

|

|

}

|

|

|

|

|

if gp, err := glob.Compile(c); err != nil {

|

|

|

|

|

// All newly created status_check_contexts are checked to ensure they are valid glob expressions before being stored in the database.

|

|

|

|

|

// But some old status_check_context created before glob was introduced may be invalid glob expressions.

|

|

|

|

|

// So log the error here for debugging.

|

|

|

|

|

log.Error("compile glob %q: %v", c, err)

|

|

|

|

|

} else if gp.Match(context) {

|

2020-01-07 20:06:14 +03:00

|

|

|

return true

|

2019-09-18 08:39:45 +03:00

|

|

|

}

|

|

|

|

|

}

|

2020-01-07 20:06:14 +03:00

|

|

|

return false

|

2019-06-30 10:57:59 +03:00

|

|

|

}

|

2023-01-16 11:00:22 +03:00

|

|

|

ctx.Data["RequiredStatusCheckState"] = pull_service.MergeRequiredContextsCommitStatus(commitStatuses, pb.StatusCheckContexts)

|

2015-09-02 11:08:05 +03:00

|

|

|

}

|

|

|

|

|

|

2020-01-07 20:06:14 +03:00

|

|

|

ctx.Data["HeadBranchMovedOn"] = headBranchSha != sha

|

|

|

|

|

ctx.Data["HeadBranchCommitID"] = headBranchSha

|

|

|

|

|

ctx.Data["PullHeadCommitID"] = sha

|

|

|

|

|

|

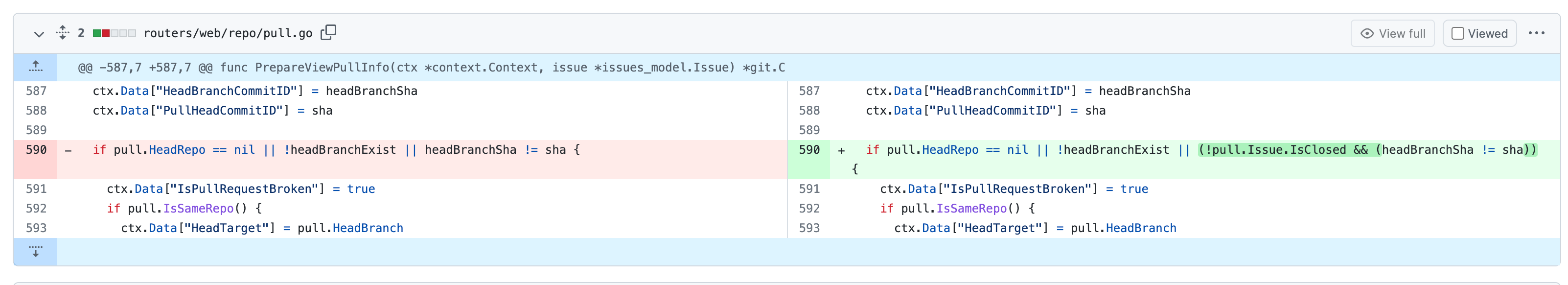

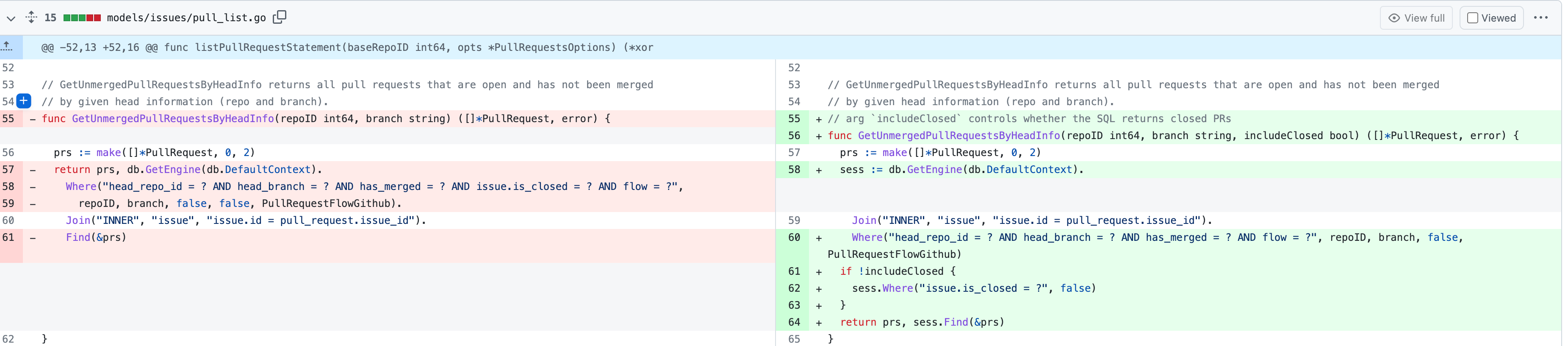

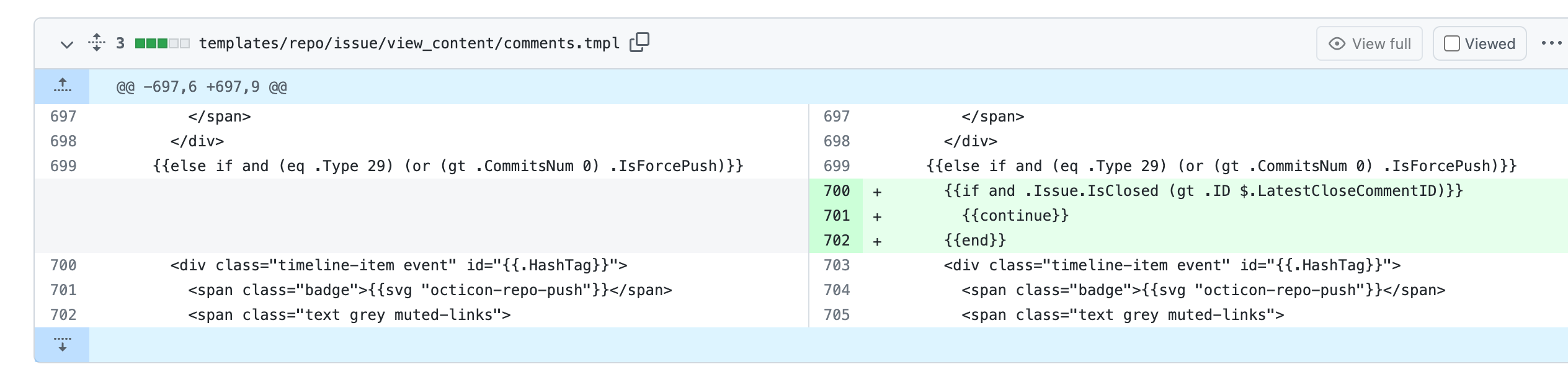

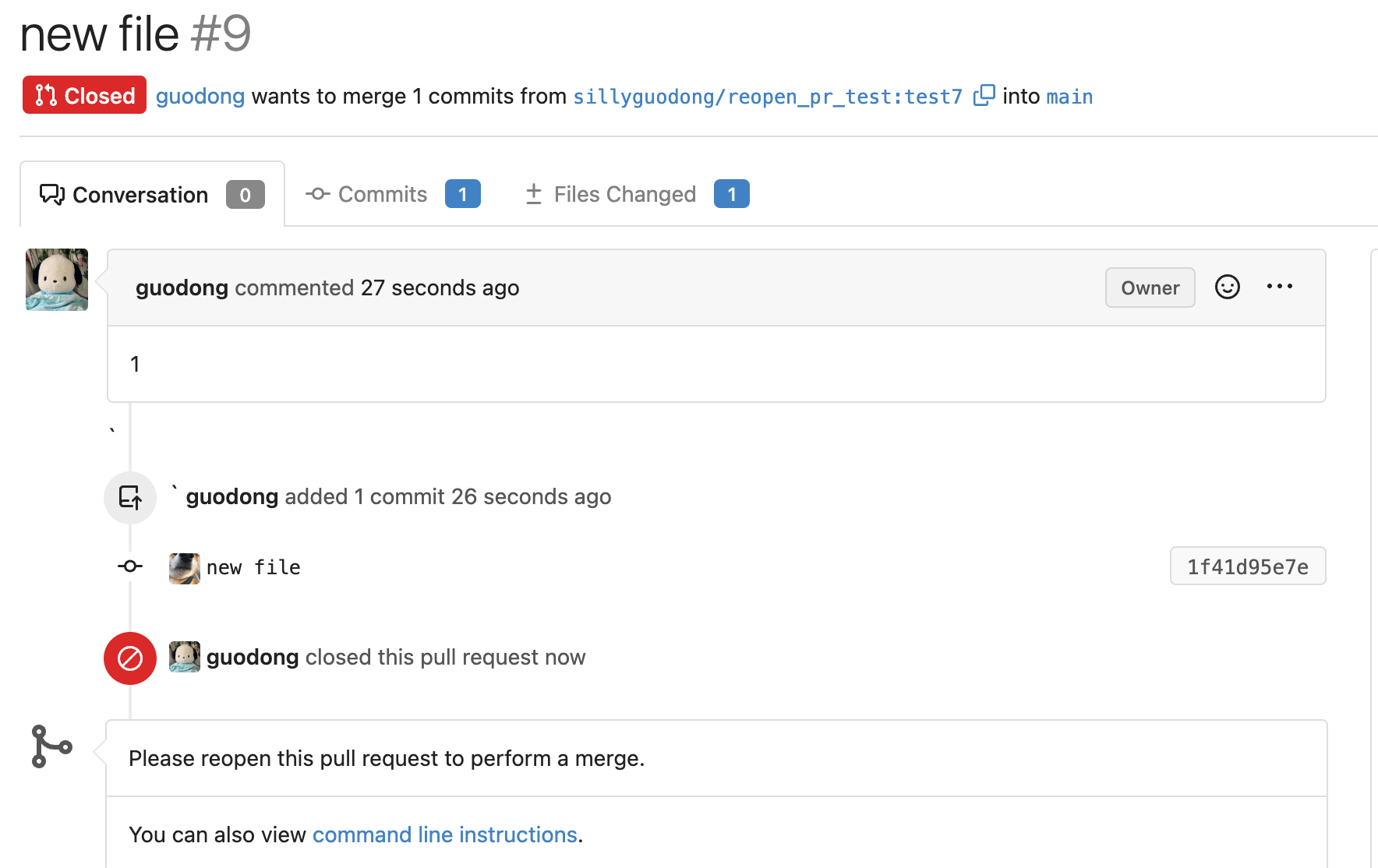

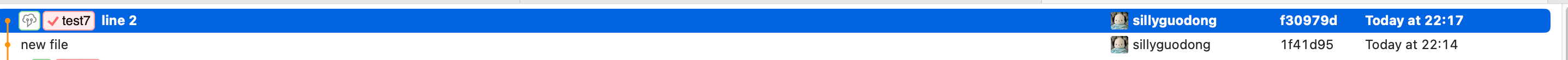

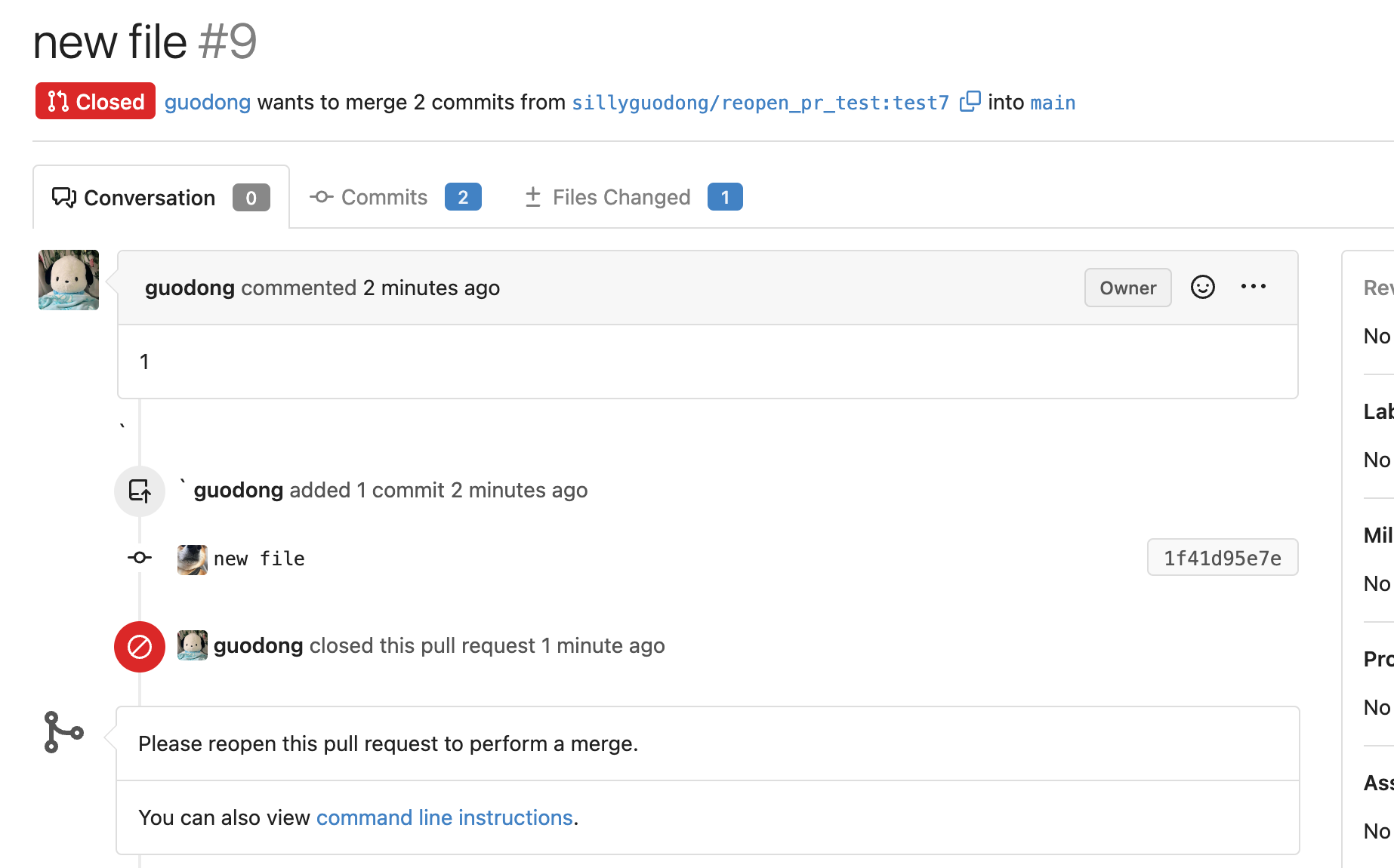

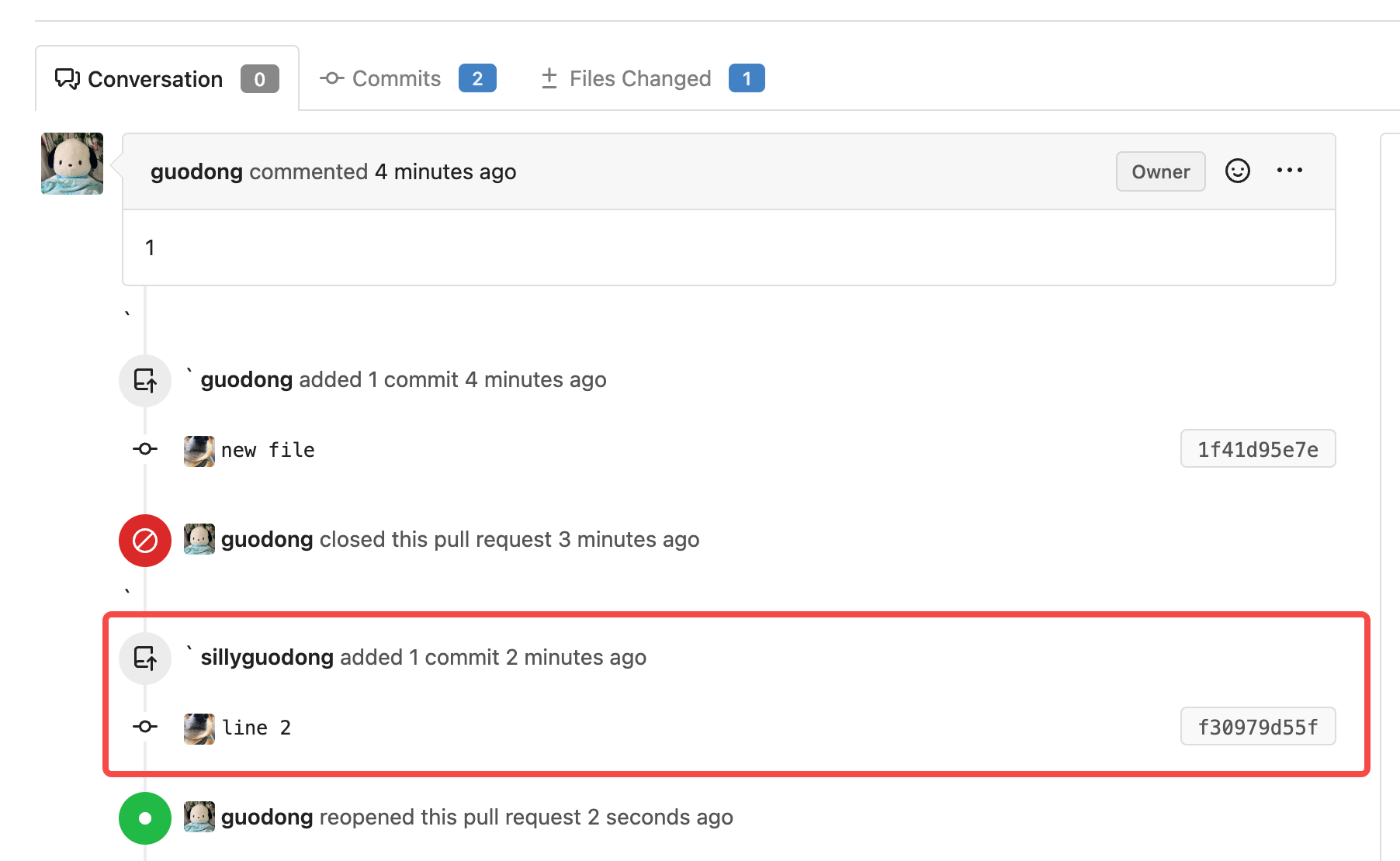

Fix cannot reopen after pushing commits to a closed PR (#23189)

Close: #22784

1. On GitHub, a PR that was closed can still be reopened after commits are pushed to it, and once it is reopened the commits pushed while it was closed appear in the timeline. The behavior described in the [issue](https://github.com/go-gitea/gitea/issues/22784) is therefore a bug that needs to be fixed.

2. After a PR is closed and commits are pushed, `headBranchSha` no longer equals `sha` (the latest commit ID of the pull reference). While that check is applied, the Reopen button is not displayed, so skip the check when the PR is closed.

3. Even if the PR is already closed, a comment record should still be inserted into the DB when commits are pushed, so `CreatePushPullComment()` should still be called.

https://github.com/go-gitea/gitea/blob/067b0c2664d127c552ccdfd264257caca4907a77/services/pull/pull.go#L260-L282

To allow this, I added a switch (`includeClosed`) to the `GetUnmergedPullRequestsByHeadInfo` func that controls whether the PR must be open. Setting `includeClosed` to `true` makes the query return closed PRs as well.

4. In the loop over comments, I use the `latestCloseCommentID` variable to record the last occurrence of a close comment. In the Go template, if the PR is closed, comments of type `CommentTypePullRequestPush(29)` that come after `latestCloseCommentID` are not rendered.

e.g.

1). The PR is initially open.

2). I click the `Close` button. The PR is now closed.

3). I push a commit to this PR even though it is closed. The commit is not displayed in the comment list, which is expected.

4). I click the `Reopen` button. The commit that was pushed after the PR was closed is now displayed.
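The rendering rule from item 4 can be sketched as a plain filter. This is a minimal sketch under stated assumptions: the `Comment` struct and both helper functions are hypothetical, "after" is taken to mean a larger comment ID, and only the value 29 for `CommentTypePullRequestPush` comes from the text above; the other type values are illustrative.

```go
package main

import "fmt"

type CommentType int

const (
	CommentTypeComment         CommentType = 0  // illustrative value
	CommentTypeClose           CommentType = 2  // illustrative value
	CommentTypePullRequestPush CommentType = 29 // value quoted in the commit message
)

// Comment is a hypothetical, trimmed-down comment record.
type Comment struct {
	ID   int64
	Type CommentType
}

// latestCloseCommentID returns the ID of the last close comment, or 0 if none.
func latestCloseCommentID(comments []Comment) int64 {
	var latest int64
	for _, c := range comments {
		if c.Type == CommentTypeClose {
			latest = c.ID
		}
	}
	return latest
}

// visibleComments hides push comments recorded after the latest close while
// the PR is closed; once the PR is reopened, every comment is shown again.
func visibleComments(comments []Comment, isClosed bool) []Comment {
	if !isClosed {
		return comments
	}
	closeID := latestCloseCommentID(comments)
	var out []Comment
	for _, c := range comments {
		if c.Type == CommentTypePullRequestPush && c.ID > closeID {
			continue
		}
		out = append(out, c)
	}
	return out
}

func main() {
	comments := []Comment{
		{ID: 1, Type: CommentTypeComment},
		{ID: 2, Type: CommentTypePullRequestPush},
		{ID: 3, Type: CommentTypeClose},
		{ID: 4, Type: CommentTypePullRequestPush}, // pushed while closed
	}
	fmt.Println(len(visibleComments(comments, true)))
	fmt.Println(len(visibleComments(comments, false)))
}
```

In the example, the push comment with ID 4 is hidden while the PR is closed and shown once it is reopened, matching steps 3) and 4).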

---------

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

2023-03-03 16:16:58 +03:00

|

|

|

if pull.HeadRepo == nil || !headBranchExist || (!pull.Issue.IsClosed && (headBranchSha != sha)) {

|

2018-08-01 06:00:35 +03:00

|

|

|

ctx.Data["IsPullRequestBroken"] = true

|

2020-03-03 01:31:55 +03:00

|

|

|

if pull.IsSameRepo() {

|

|

|

|

|

ctx.Data["HeadTarget"] = pull.HeadBranch

|

2020-06-08 21:07:41 +03:00

|

|

|

} else if pull.HeadRepo == nil {

|

2023-08-04 01:07:15 +03:00

|

|

|

ctx.Data["HeadTarget"] = ctx.Locale.Tr("repo.pull.deleted_branch", pull.HeadBranch)

|

2020-03-03 01:31:55 +03:00

|

|

|

} else {

|

2020-06-08 21:07:41 +03:00

|

|

|

ctx.Data["HeadTarget"] = pull.HeadRepo.OwnerName + ":" + pull.HeadBranch

|

2020-03-03 01:31:55 +03:00

|

|

|

}

|

2015-09-02 16:26:56 +03:00

|

|

|

}

|

|

|

|

|

|

2020-01-07 20:06:14 +03:00

|

|

|

compareInfo, err := baseGitRepo.GetCompareInfo(pull.BaseRepo.RepoPath(),

|

2022-01-18 10:45:43 +03:00

|

|

|

git.BranchPrefix+pull.BaseBranch, pull.GetGitRefName(), false, false)

|

2015-09-02 11:08:05 +03:00

|

|

|

if err != nil {

|

2016-07-23 13:35:16 +03:00

|

|

|

if strings.Contains(err.Error(), "fatal: Not a valid object name") {

|

2018-08-01 06:00:35 +03:00

|

|

|

ctx.Data["IsPullRequestBroken"] = true

|

2020-03-03 01:31:55 +03:00

|

|

|

ctx.Data["BaseTarget"] = pull.BaseBranch

|

2016-07-23 13:35:16 +03:00

|

|

|

ctx.Data["NumCommits"] = 0

|

|

|

|

|

ctx.Data["NumFiles"] = 0

|

|

|

|

|

return nil

|

|

|

|

|

}

|

|

|

|

|

|

2019-06-07 23:29:29 +03:00

|

|

|

ctx.ServerError("GetCompareInfo", err)

|

2015-09-02 11:08:05 +03:00

|

|

|

return nil

|

|

|

|

|

}

|

2018-08-13 22:04:39 +03:00

|

|

|

|

2021-07-29 05:32:48 +03:00

|

|

|

if compareInfo.HeadCommitID == compareInfo.MergeBase {

|

|

|

|

|

ctx.Data["IsNothingToCompare"] = true

|

|

|

|

|

}

|

|

|

|

|

|

2023-10-11 07:24:07 +03:00

|

|

|

if pull.IsWorkInProgress(ctx) {

|

2018-08-13 22:04:39 +03:00

|

|

|

ctx.Data["IsPullWorkInProgress"] = true

|

2022-11-19 11:12:33 +03:00

|

|

|

ctx.Data["WorkInProgressPrefix"] = pull.GetWorkInProgressPrefix(ctx)

|

2018-08-13 22:04:39 +03:00

|

|

|

}

|

|

|

|

|

|

2019-02-05 14:54:49 +03:00

|

|

|

if pull.IsFilesConflicted() {

|

|

|

|

|

ctx.Data["IsPullFilesConflicted"] = true

|

|

|

|

|

ctx.Data["ConflictedFiles"] = pull.ConflictedFiles

|

|

|

|

|

}

|

|

|

|

|

|

2021-08-09 21:08:51 +03:00

|

|

|